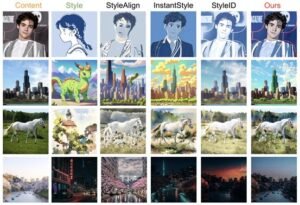

To develop the visual language of animated films, this study examines the process of identifying and extracting aesthetic elements from comprehensive material painting images. The study demonstrates that, due to the diversity of materials and the episodic nature of its development, comprehensive material painting offers a distinctive visual impact and artistic expression. A graph convolutional network-based layout perception method is proposed to address the classic convolutional neural network’s limitation in capturing an image’s overall layout information when assessing aesthetics. A novel adjacency matrix is constructed by incorporating dependencies between spatial distance information and regional features, enabling comprehensive perception of image layout. Experimental results show that the proposed LARC-CNN model significantly improves the accuracy of aesthetic quality binary classification, aesthetic score regression, and aesthetic score distribution prediction. It also performs well in evaluating aesthetic quality on the AVA dataset. Furthermore, by analyzing the works of artists like Gu Wenda and Hu Wei, the research highlights the diversity and creativity of ink fused with other materials, exploring the use of ink elements in modern mixed-media paintings. Through the appropriation and recombination of materials, these mixed-media paintings not only retain the essence of traditional ink and wash painting but also enhance the visual and artistic impact of the artwork.

Due to its distinctive artistic expression and rich material vocabulary, mixed-media painting has steadily gained prominence as a contemporary art genre [1]. Unlike traditional painting, mixed-media painting expands the possibilities for artistic expression and offers the viewer a new visual experience by integrating multiple media and using innovative techniques. Mixed-media painting holds a unique place within the framework of modern art because of its originality and diversity, especially in today’s world where multiculturalism and globalization are intertwined [2]. However, a new challenge and area of research lies in extracting the aesthetic elements of this art form and applying them to other artistic mediums, such as the visual language of animated films.

Mixed-media paintings are characterized by the fusion of diverse materials, including acrylic, emulsion, wormwood, metal, plaster, and more, resulting in artworks with layered expression and a powerful visual impact [3,4]. For example, as shown in Figures 1 and 2, Kiefer’s “Serpent of Paradise” and “Ancient Woman” use materials like sand, clay, and steel to create a harsh and cold visual effect, while simultaneously incorporating warm, soft elements like raindrops and a little girl’s skirt to form a striking visual contrast. This versatile creative approach has informed the development of visual language in animated films.

The visual impact and experience of animated films are largely shaped by their image language, a key component of audiovisual art. A thorough investigation into incorporating the artistic qualities of mixed-media painting into the visual language of animated films is essential to preserve the work’s theme and content while enhancing its visual quality [5,6]. For instance, Hungarian animation director Péter Vácz’s 2013 animated short film Rabbit and Deer (shown in Figures 3 and 4) utilizes techniques such as “insertion,” “collage,” and “combination” from mixed-media painting to alternate between two- and three-dimensional worlds. These techniques effectively convey the characters’ struggles and conflicts with their own identities and the outside world, contributing to the animation’s artistic depth and visual appeal.

While mixed-media painting has been widely used in the visual arts, significant challenges remain in successfully extracting its aesthetic qualities for application in animated films. First, the material language of mixed-media paintings is highly variable and episodic, requiring further research to determine how these qualities can be represented in animated films while achieving a balanced integration. Second, the visual elements of mixed-media paintings must harmonize closely with the plot and composition of animated films [7,8]. Third, current deep learning-based techniques for evaluating image aesthetics rely primarily on convolutional operations, which often struggle to capture an image’s overall layout information and thereby reduce the accuracy of aesthetic quality evaluation.

Recent advances have been made in deep learning-based aesthetic evaluation techniques. For example, the A-Lamp model proposed in [9] encodes the global compositional information of an image by selecting multiple image blocks from the original image using object detection and creating attribute-relationship maps of these regions. However, this approach has limitations in layout perception, particularly for images lacking prominent objects. Furthermore, many systems apply stretching or cropping when processing images, which can distort the original layout and reduce aesthetic quality [10].

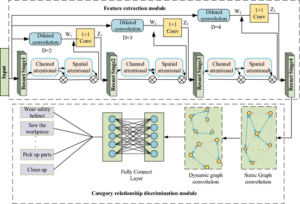

To address these limitations, this study proposes a graph convolutional network-based layout perception technique that achieves a comprehensive perception of image layout by modeling the interdependencies among local parts of an image. Specifically, this study uses a graph convolutional network for learning and reasoning to represent an image’s layout information as dependencies between its local regions, enhancing the model’s capacity for aesthetic learning [11]. Based on this, a new model called LARC-CNN is developed for aesthetic quality evaluation and validated using the AVA dataset. Experimental results show that the model performs exceptionally well in binary aesthetic quality classification, aesthetic score regression, and aesthetic score distribution prediction tasks.

An image’s overall layout significantly impacts its aesthetic quality. The approach presented in this chapter aims to enhance aesthetic learning and improve the performance of aesthetic quality evaluation by enabling the perception of an image’s layout information. Specifically, this chapter models an image’s layout information as dependencies among its local regions [12]. Conventional convolution operations in convolutional neural networks are often inefficient at capturing relationships between regions that are distant in coordinate space. Graph convolutional networks, which are well suited to learning complex relationships between image regions, are therefore employed to perform dependency inference and thus facilitate layout perception.
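As a rough illustration of what dependency inference with a graph convolution involves, the sketch below applies one propagation step to a set of region features. The propagation rule used here (weighted aggregation over incoming edges, a linear projection, then ReLU) is one common form and is an assumption, since this chapter does not spell out the exact rule; the function name `graph_conv` and the toy matrices are hypothetical.

```python
def graph_conv(adj, feats, weight):
    """One graph-convolution step: ReLU(adj^T . feats . weight).

    adj    : N x N matrix, adj[i][j] is the weight of the edge from node i to
             node j; each column is assumed to sum to 1.
    feats  : N x D region features, one row per node.
    weight : D x D_out projection (learned in a real model, fixed here).
    """
    n, d, d_out = len(feats), len(weight), len(weight[0])
    # Aggregate: node j receives the adj[i][j]-weighted sum of all node features.
    agg = [[sum(adj[i][j] * feats[i][k] for i in range(n)) for k in range(d)]
           for j in range(n)]
    # Project every aggregated vector and apply the ReLU non-linearity.
    return [[max(0.0, sum(agg[j][k] * weight[k][c] for k in range(d)))
             for c in range(d_out)]
            for j in range(n)]


# Toy example: two nodes that weight each other equally.
adj = [[0.5, 0.5],
       [0.5, 0.5]]
feats = [[1.0, 0.0],
         [0.0, 1.0]]
identity = [[1.0, 0.0],
            [0.0, 1.0]]
out = graph_conv(adj, feats, identity)
```

With equal edge weights, each node ends up with the average of both feature vectors, which is the sense in which information from distant regions reaches a node in a single step.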

Figure 5 illustrates the detailed architecture of the graph-convolution layout-aware subnetwork. The fully convolutional network (FCN) is used as the backbone network to encode the regional features of an input image, which is stretched and preprocessed to a fixed size. This process results in a feature map with dimensions \( W \times H \times D \). Because the FCN encoding process preserves the spatial information of the original image, the feature vectors at each spatial location can be stacked into a matrix based on the obtained feature map to produce the region feature representation \( F = \left[ f_1, f_2, \dots, f_N \right] \in \mathbb{R}^{N \times D} \), where \( D \) denotes the dimensionality of the features and \( N = W \times H \) indicates the number of region features.
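The stacking step can be sketched in a few lines. The example assumes the feature map is stored as nested lists indexed `[row][column][channel]` and that nodes are numbered in row-major order; both choices, along with the helper names `region_features` and `node_position`, are illustrative assumptions rather than details given in the text.

```python
def region_features(feature_map):
    """Stack the D-dimensional vector at every spatial location into an N x D list."""
    return [list(vec) for row in feature_map for vec in row]


def node_position(index, width):
    """Recover the (row, column) grid position of a node under row-major numbering."""
    return divmod(index, width)


# Toy feature map with H = 2 rows, W = 3 columns and D = 4 channels.
H, W, D = 2, 3, 4
feature_map = [[[float(r * 10 + c)] * D for c in range(W)] for r in range(H)]

F = region_features(feature_map)   # N x D with N = W * H
N = len(F)
```

Keeping a fixed node ordering matters because the grid position of each node is needed later to compute spatial distances between regions.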

Next, using this region feature representation, a fully connected graph \( \text{Graph} = (V, E) \) is created to model the dependencies between regions. The graph has \( N \) nodes, one for each region feature, so that \( V = \{ f_1, f_2, \dots, f_N \} \). The similarity function \( s(f_i, f_j) \), with \( 1 \leq i, j \leq N \), then computes the semantic content similarity between any two nodes, and this value is assigned as the weight of the edge between them to characterize the dependency between the two regions. This chapter measures feature similarity \( s(f_i, f_j) \) using the vector inner product and defines the similarity function as follows:

\[ s(f_i, f_j) = \varphi(f_i)^{T} \psi(f_j), \tag{1} \]

where \( \varphi(f_i) = A f_i \), \( \psi(f_j) = B f_j \), and \( A, B \in \mathbb{R}^{D \times D} \) are learnable parameters that are updated via backpropagation. Linearly transforming the feature vectors in this way strengthens the model’s generalization [13,14]. Once the weights between every pair of nodes have been calculated, the matrix \( \text{Sim} \in \mathbb{R}^{N \times N} \) is obtained. Following [14], the Softmax function is then used to normalize each column of \( \text{Sim} \):

\[ \text{Sim}_{i,j} = \frac{\exp\left(s\left(f_i, f_j\right)\right)}{\sum_{k=1}^{N} \exp\left(s\left(f_k, f_j\right)\right)}, \tag{2} \]

where \( \exp(\cdot) \) denotes the exponential function. After Softmax normalization, the total weight of the edges connecting to each node in \( \text{Graph} \) is 1. The normalized graph is represented by the matrix \( \text{Sim} \), which acts as the adjacency matrix describing the dependencies among the nodes of \( \text{Graph} \).
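A minimal sketch of Eqs. (1) and (2) in plain Python follows. The identity matrices standing in for the learnable parameters \( A \) and \( B \), the three toy feature vectors, and the helper names are assumptions made for illustration; in a trained model \( A \) and \( B \) would be learned.

```python
import math


def matvec(M, v):
    """Multiply matrix M (list of rows) by vector v."""
    return [sum(m * x for m, x in zip(row, v)) for row in M]


def similarity_matrix(F, A, B):
    """Eq. (1): s(f_i, f_j) = (A f_i)^T (B f_j) for every pair of region features."""
    phi = [matvec(A, f) for f in F]
    psi = [matvec(B, f) for f in F]
    return [[sum(p * q for p, q in zip(phi_i, psi_j)) for psi_j in psi]
            for phi_i in phi]


def column_softmax(S):
    """Eq. (2): normalise each column of S so that its entries sum to 1."""
    n = len(S)
    out = [[0.0] * n for _ in range(n)]
    for j in range(n):
        col = [S[k][j] for k in range(n)]
        shift = max(col)                        # subtract the max for numerical stability
        exps = [math.exp(v - shift) for v in col]
        total = sum(exps)
        for i in range(n):
            out[i][j] = exps[i] / total
    return out


# Toy input: N = 3 region features of dimension D = 2.
F = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
A = [[1.0, 0.0], [0.0, 1.0]]   # placeholder for the learned matrix A
B = [[1.0, 0.0], [0.0, 1.0]]   # placeholder for the learned matrix B

S = similarity_matrix(F, A, B)
Sim = column_softmax(S)
```

Subtracting the column maximum before exponentiating leaves the result of Eq. (2) unchanged but avoids overflow when the inner products are large.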

Each element of the \( \text{Sim} \) matrix measures the semantic content similarity between two region features through an inner product, which completes the content-based modeling of inter-region relationships. However, beyond dependencies based on semantic content similarity, the spatial composition of visual elements is also a critical feature that affects the layout and aesthetics of an image [16]. As previously mentioned, the method proposed in this chapter, like most current methods, preprocesses the input image to a fixed size before the FCN feature encoding operation. This preprocessing uses a stretching operation, which helps preserve the image layout to some extent but inevitably alters the spatial composition of the image’s visual elements and changes the spatial distances between different regions, thereby affecting the image’s original layout.

Figure 6 illustrates the spatial relationships between regions in the regional feature representation \( F \), obtained after the FCN convolution operation is applied to the preprocessed image. The spatial distance between two nodes, each of which corresponds to a local region, is defined as:

\[ d'\left(f_i, f_j\right) = \sqrt{x_{i,j}^{2} + y_{i,j}^{2}}, \tag{3} \]

where \( x_{i,j} \in \{ 0,1,\dots,W-1 \} \) and \( y_{i,j} \in \{ 0,1,\dots,H-1 \} \) represent the horizontal and vertical distances, respectively, between nodes \( f_i \) and \( f_j \). Since the CNN model typically accepts square input, \( W = H \) in general. The figure shows that the stretching preprocessing introduces a discrepancy between this spatial relationship and the spatial relationship in the original image. To address this issue, this chapter adjusts the spatial distances between regions using the aspect ratio of the original image and embeds this spatial information into the region relation graph \( \text{Graph} \) to preserve as much of the image’s original structure as possible. Letting \( h \) and \( w \) represent the height and width of the original image, respectively, and \( \text{Aspect}_r = \frac{w}{h} \), the original spatial distance between the nodes is:

\[ d\left(f_i, f_j\right) = \begin{cases} \sqrt{\left(x_{i,j} \times \text{Aspect}_r\right)^{2} + y_{i,j}^{2}}, & \text{Aspect}_r \le 1, \\ \sqrt{x_{i,j}^{2} + \left(\dfrac{y_{i,j}}{\text{Aspect}_r}\right)^{2}}, & \text{Aspect}_r > 1. \end{cases} \tag{4} \]

The matrix \( D \in \mathbb{R}^{N \times N} \) characterizing the original spatial distance relationship between nodes can be obtained through Eq. (4). Similarly, each column of the \( D \) matrix is normalized using the Softmax function:

\[ \text{Dis}_{i,j} = \frac{\exp\left(d\left(f_i, f_j\right)\right)}{\sum_{k=1}^{N} \exp\left(d\left(f_k, f_j\right)\right)}. \tag{5} \]

The spatial composition information is then integrated into the region dependency graph \( \text{Graph} \) to create the new adjacency matrix \( A \in \mathbb{R}^{N \times N} \), which preserves the original aspect ratio.
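The distance computation in Eqs. (4) and (5) can be sketched as follows. The example assumes nodes are numbered in row-major order on the \( W \times H \) grid, so that the horizontal and vertical offsets are differences of column and row indices; that ordering, the function names, and the 400 × 800 example image are assumptions for illustration only.

```python
import math


def node_distance(i, j, width, aspect_r):
    """Eq. (4): aspect-ratio-corrected distance between grid nodes i and j."""
    x = abs(i % width - j % width)      # horizontal offset x_{i,j} in grid cells
    y = abs(i // width - j // width)    # vertical offset y_{i,j} in grid cells
    if aspect_r <= 1:
        return math.hypot(x * aspect_r, y)
    return math.hypot(x, y / aspect_r)


def column_softmax(matrix):
    """Eq. (5): normalise each column so that its entries sum to 1."""
    n = len(matrix)
    out = [[0.0] * n for _ in range(n)]
    for j in range(n):
        col = [matrix[k][j] for k in range(n)]
        shift = max(col)                # subtract the max for numerical stability
        exps = [math.exp(v - shift) for v in col]
        total = sum(exps)
        for i in range(n):
            out[i][j] = exps[i] / total
    return out


def distance_matrices(width, height, orig_w, orig_h):
    """Return the raw distance matrix D and its column-normalised form Dis."""
    aspect_r = orig_w / orig_h          # Aspect_r = w / h of the original image
    n = width * height
    D = [[node_distance(i, j, width, aspect_r) for j in range(n)] for i in range(n)]
    return D, column_softmax(D)


# Toy example: a 2 x 2 feature grid from a portrait image (w = 400, h = 800).
D, Dis = distance_matrices(width=2, height=2, orig_w=400, orig_h=800)
```

For this portrait image \( \text{Aspect}_r = 0.5 \), so a one-cell horizontal step counts as half a one-cell vertical step, which restores the proportions that stretching to a square input removed.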

At this stage, the weights of the edges connecting nodes in the graph

Graph incorporate both the spatial

distance relationships between them and the semantic content similarity

between areas, aligning with the original image structure. This process

completes the construction of the dependency graph. As previously

mentioned, Graph is a fully connected

graph, and Figure 6 illustrates only partial connections to highlight

the strong relationships between nodes for clarity. To further verify the effectiveness and superiority of the proposed

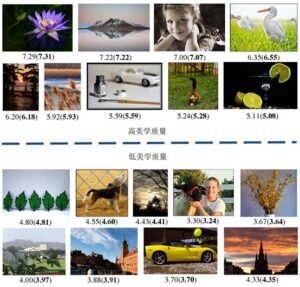

LARC-CNN model, this subsection quantitatively compares it with several

current state-of-the-art approaches. The model includes three graph

convolutional layers, with the number of loop connections T set to two, and the neural layers

with additional loop connections in the set R are set to {conv5_2,\,conv4_2,\,conv3_2}. The results of

the comparison experiments are shown in Table 1. These parameter

choices are informed by the analysis and experimental findings presented

in subsection 2. The LARC-CNN model presented in this chapter achieves competitive

results in three key areas: binary classification of aesthetic quality,

regression of aesthetic scores, and prediction of aesthetic score

distributions, as shown by comparing the aesthetic quality evaluation

models in Table 1. Unlike image block input-based methods such

as RAPID, DMA-Net, MNA-CNN, A-Lamp, MPada, and GPF-CNN, the proposed

network framework directly learns discriminative features from the

entire image, bypassing the need for labor-intensive image block

sampling and result aggregation. This approach leads to higher

prediction accuracies. Specifically, the A-Lamp method encodes the spatial layout of an

image using a manually designed aggregation structure and a complex

image block sampling strategy. Its layout perception relies on learning

the relationships between prominent regions, which must be specified in

advance and affects performance based on the extraction of these

regions. In contrast, the LARC-CNN proposed in this chapter models the

interdependence of visual elements at the feature level, achieving

automatic end-to-end layout perception and aesthetic prediction via

graph convolution. This approach improves the accuracy of binary

classification of aesthetic quality by 1.18% over the A-Lamp method. One MNA-CNN approach uses Spatial Pyramid Pooling (SPP) [17] to process images at their

original size and aspect ratio, recognizing that preprocessing images

through stretching, cropping, or padding distorts their composition.

While effective, this approach does not explicitly model visual elements

or their interdependencies as thoroughly as the approach in this

chapter, which improves the LARC-CNN model’s accuracy by 6.28%. This

method aims to preserve compositional information in an aesthetic

model. For the aesthetic score regression task, Pool-3FC method performed

best on SRCC and PLCC by directly regressing on the mean aesthetic

score, although this limits prediction accuracy by omitting variance and

maximum values in the score distribution. However, Pool-3FC’s use of raw

resolution images with preserved aspect ratios and layouts significantly

slows model training and inference. The LARC-CNN model outperforms other compatible batch-trained

methods, including MTRLCNN, NIMA, ILGNet, PA_IAA, and ADFC, across all

three aspects of the aesthetic quality evaluation task. It achieves

improvements in binary prediction of aesthetic quality by 4.60%, 2.17%,

1.02%, and 0.78%, respectively, and further improves accuracy by 0.44%,

0.85%, and 2.78% in other metrics. In conclusion, the proposed LARC-CNN

model effectively perceives image layout and performs efficient

aesthetic learning, yielding more accurate assessments of aesthetic

quality across three dimensions. Sample images from the AVA test set,

along with their model-predicted results, are displayed in Figure 7. Chinese ink and wash elements are frequently incorporated into

contemporary composite material paintings, where the fusion of ink with

various materials serves as a primary means of expression. Gu Wenda’s

The Lost Dynasty – The Modern Meaning of Totems and Taboos is a

notable example of mixed-media painting, combining multiple materials

with innovative ink and wash techniques as its central feature. Instead

of using traditional Chinese ink techniques such as “hook,

strangulation, chafing, and dotting” to convey ideas, this work utilizes

ink as the primary medium, supplemented by acrylic and gouache.

Additionally, the piece interprets historical divergence from reality

through the use of intentionally misspelled words on a large-format

newspaper (Figure 8). For example, to enhance the artistic appeal of his paintings, Mr. Hu

Wei incorporated additional materials alongside ink and wash as the

primary medium in his composite material artworks. This approach

resulted in the piece titled Impression of Sima (Figure 9). The Late Tang ink painting technique is applied using

various types of paper and mineral pigments to create the piece Sima

Impressions, lending the artwork a sense of “harmony” through this

blend of materials. Additionally, the natural bond between ink and

mineral pigments in these paintings provides viewers with an atmospheric

experience, enhancing their appreciation of the unique qualities of ink.

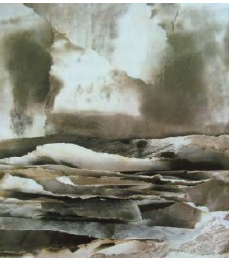

For example, in Chen Shouyi’s piece Landscape, rolling river

waves are accentuated using rice paper and ink as primary media,

combined with additional materials. The ink collage, with various

textures from inked rice paper, clay paper, and watercolor paper,

enriches the natural expression of the scene, better reflecting the

image’s texture (Figure 10). One of the primary ways Chinese ink elements are expressed in

mixed-media paintings is through the appropriation of ink imagery. For

instance, the use of traditional motifs, such as fish, water, pavilions,

and insects, can enhance the artistic quality of mixed-media paintings.

For example, in Qiu Deshu’s Fission – Soul Series, the visual

appeal is elevated by incorporating Chinese ink and wash elements

(Figure 11). This mixed-media artwork was created by tearing rice paper collage to

depict landscapes such as mountains and rivers. Unlike traditional

works, the outline of the mountains is formed using shredded paper

rather than ink and brush. At the same time, the dots and washes in the

pictorial style are crafted with acrylic paint, a relatively modern

technique that draws the viewer’s attention [18,19]. For example, Shang Yang’s mixed-media

painting Dong Qichang Project 2 directly appropriates

traditional landscape imagery without using ink and wash materials

(Figure 12). This piece appropriates the traditional Chinese ink motif of the

“mountain” image. However, in terms of materials, the airbrush technique

combined with oil and acrylic paints enhances the depth of the scene,

allowing for a more fluid representation of the near, middle, and

distant views of the rolling hills and rivers. An example of ink and

watercolor appropriation can be seen in Chen Junhao’s replica of Fan

Kuan’s Traveling in the Mountains from the Northern Song

Dynasty, created with mosquito nails. This study presents a method for assessing the aesthetic quality of

images based on graph convolution networks and thoroughly discusses the

aesthetic aspects of composite material painting and its use in the

visual language of animated films. We summarize its distinctive

aesthetic qualities in visual art—material diversity, visual contrast,

texture richness, etc.—through the analysis and case study of material

language in comprehensive material painting, offering a crucial resource

for the development of animation film picture language. In order to

obtain a thorough understanding of the image layout, we present a novel

approach to layout perception in this paper that uses graph

convolutional networks to describe the local inter-region dependencies

of an image. We developed the LARC-CNN model, which assesses aesthetic

quality, using this methodology, and it performed exceptionally well in

experimental validation using the AVA dataset. This paper is a phased research achievement of the project

“Construction and Practice of Contemporary Comprehensive Material

Painting Aesthetic System,” funded by the Humanities and Social Sciences

Research Planning Fund of the Ministry of Education [project approval

number: 23YJA760104].3. Experimental Results and

Analysis

Method

ACC

SRCC

PLCC

RMSE

EMD

RAPID

74.45

–

–

–

–

DMA-Net

75.52

–

–

–

–

MNA-CNN

78.89

–

–

–

–

MTRLCNN

78.85

–

–

–

–

A-Lamp

82.24

–

–

–

–

NIMA

81.16

0.625

0.634

–

0.05

MPada

82.32

–

–

–

–

GPF-CNN

80.56

0.674

0.532

0.532

0.045

ILGNet

82.55

–

–

–

–

PA_IAA

82.88

0.067

–

–

0.045

AFDC

83.24

0.65

–

0.521

–

LARC-CNN

83.26

0.712

0.725

0.508

0.045

4. Expression

of Ink Elements in Composite Material Painting

5. Conclusion

Fund support